2016 Employer Health Benefits Survey

The Kaiser Family Foundation and the Health Research & Educational Trust (Kaiser/HRET) conduct this annual survey of employer-sponsored health benefits. HRET, a nonprofit research organization, is an affiliate of the American Hospital Association. The Kaiser Family Foundation designs, analyzes, and conducts this survey in partnership with HRET, and also funds the study. Kaiser contracts with researchers at NORC at the University of Chicago (NORC) to work with the Kaiser and HRET researchers in conducting the study. Kaiser/HRET retained National Research, LLC (NR), a Washington, D.C.-based survey research firm, to conduct telephone interviews with human resource and benefits managers using the Kaiser/HRET survey instrument. From January to June 2016, NR completed full interviews with 1,933 firms.

Survey Topics

Kaiser/HRET asks each participating firm as many as 400 questions about its largest health maintenance organization (HMO), preferred provider organization (PPO), point-of-service (POS) plan, and high-deductible health plan with a savings option (HDHP/SO).1 We treat exclusive provider organizations (EPOs) and HMOs as one plan type and report the information under the banner of “HMO”; if an employer sponsors both an HMO and an EPO, they are asked about the attributes of the plan with the larger enrollment. Similarly, starting in 2013, plan information for conventional (or indemnity) plans was collected within the PPO battery. Less than 1% of firms that completed the PPO section had more enrollment in a conventional plan than in a PPO plan.

The survey includes questions on the cost of health insurance, health benefit offer rates, coverage, eligibility, enrollment patterns, premium contributions,2 employee cost sharing, prescription drug benefits, retiree health benefits, and wellness benefits.

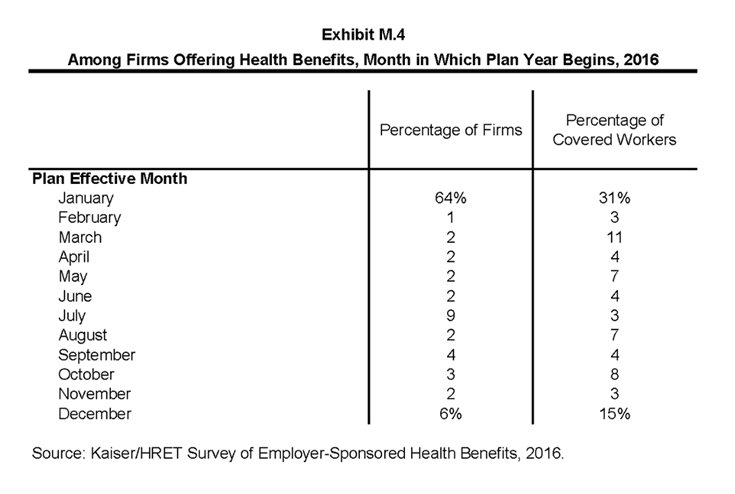

Firms are asked about the attributes of their current plans during the interview. While the survey’s fielding period begins in January, many respondents may have a plan whose 2016 plan year has not yet begun (Exhibit M.4). In some cases, plans may report the attributes of their 2015 plans and some plan attributes (such as HSA deductible limits) may not meet the calendar year regulatory requirements.

Response Rate

After determining the required sample from U.S. Census Bureau data, Kaiser/HRET drew its sample from a Survey Sampling Incorporated list (based on an original Dun and Bradstreet list) of the nation’s private employers and from the Census Bureau’s Census of Governments list of public employers with three or more workers. To increase precision, Kaiser/HRET stratified the sample by ten industry categories and six size categories. Kaiser/HRET attempted to repeat interviews with prior years’ survey respondents (with at least ten employees) who participated in either the 2014 or the 2015 survey, or both. Firms with 3-9 employees are not included in the panel to minimize the impact of panel effects on the offer rate statistic. As a result, 1,457 of the 1,933 firms that completed the full survey also participated in either the 2014 or 2015 surveys, or both.3 The overall response rate is 40%.4 To increase response rates, firms with 3–9 employees were offered an incentive of $75 in cash or as a donation to a charity of their choice to complete the full survey.

The vast majority of questions are asked only of firms that offer health benefits. A total of 1,687 of the 1,933 responding firms indicated they offered health benefits. The response rate for firms that offer health benefits is also 40%.

We asked one question of every firm we reached by phone, including firms that declined to participate in the full survey. The question was, “Does your company offer a health insurance program as a benefit to any of your employees?” A total of 3,110 firms responded to this question (including 1,933 who responded to the full survey and 1,177 who responded to this one question). These responses are included in our estimates of the percentage of firms offering health benefits.5 The response rate for this question is 65%. In 2012, the calculation of the response rates was adjusted to be slightly more conservative than in previous years.

Beginning in 2014, we began recording whether firms with a non-final disposition code (such as a firm that requested a callback at a later time or date) offered health benefits. By doing so, we attempt to mitigate any potential non-response bias of firms either offering or not offering health benefits on the overall offer rate statistic. In 2016, 353 of the 1,177 firm responses that solely answered the offer question were obtained through this pathway.

Firm Size Categories and Key Definitions

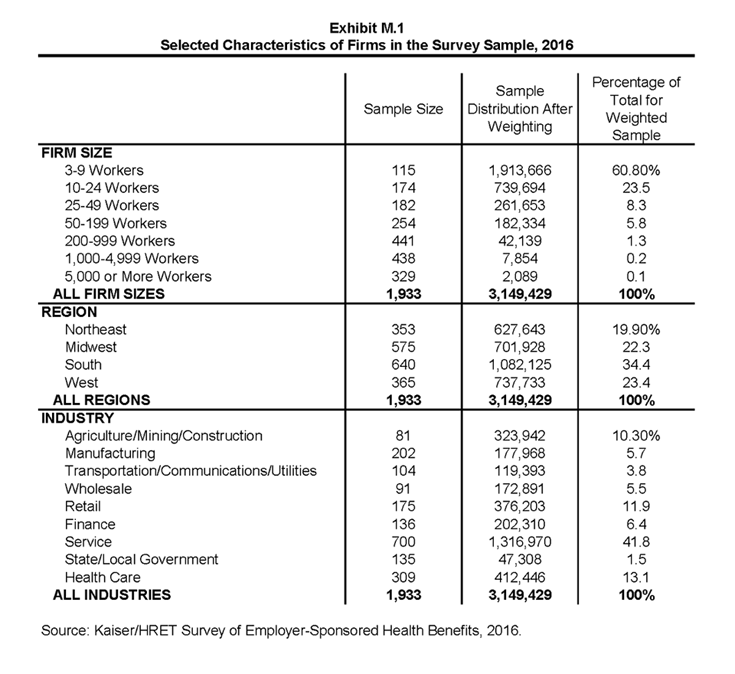

Throughout the report, exhibits categorize data by size of firm, region, and industry. Firm size definitions are as follows: small firms have 3 to 199 workers, and large firms have 200 or more workers. Exhibit M.1 shows selected characteristics of the survey sample. A firm’s primary industry classification is determined from Survey Sampling International’s (SSI) designation on the sampling frame and is based on the U.S. Census Bureau’s North American Industry Classification System (NAICS). A firm’s ownership category and other firm characteristics used in exhibits such as 3.3 and 6.21 are based on respondents’ answers. While there is considerable overlap in firms in the “State/Local Government” industry category and those in the “public” ownership category, they are not identical. For example, public school districts are included in the service industry even though they are publicly owned.

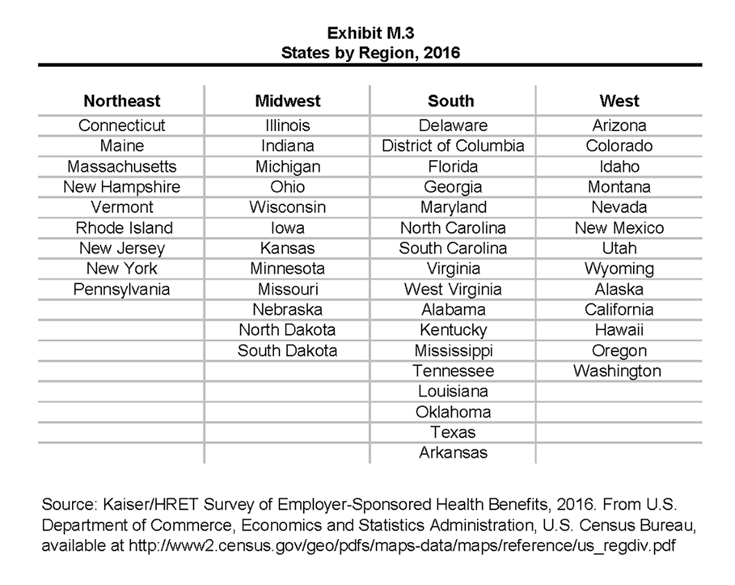

Exhibit M.3 presents the breakdown of states into regions and is based on the U.S. Census Bureau’s categorizations. State-level data are not reported, both because the sample size is insufficient in many states and because we collect information only on where a firm is headquartered rather than where workers are actually employed. Some mid- and large-size employers have employees in more than one state, so the location of the headquarters may not match the location of the plan for which we collected premium information.

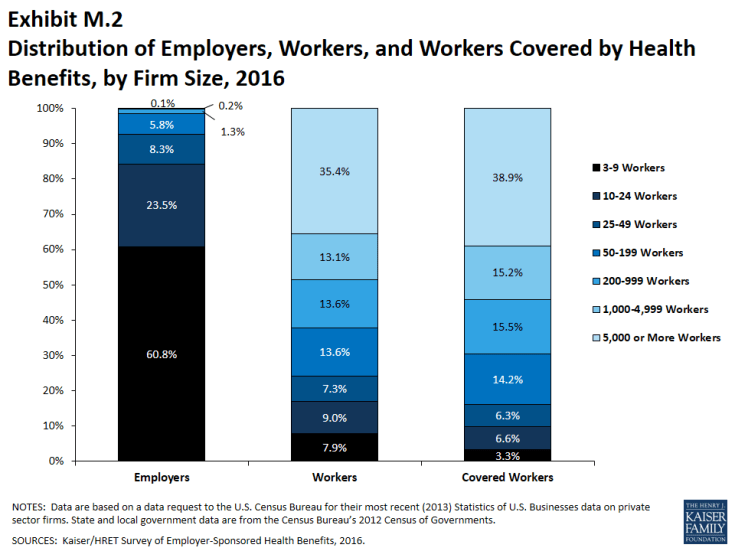

Exhibit M.2 displays the distribution of the nation’s firms, workers, and covered workers (employees receiving coverage from their employer). Among the over three million firms nationally, approximately 60.8% employ 3 to 9 workers; such firms employ 7.9% of workers and 3.3% of workers covered by health insurance. In contrast, less than 1% of firms employ 5,000 or more workers; these firms employ 35.4% of workers and 38.9% of covered workers. Therefore, the smallest firms dominate any statistics weighted by the number of employers. For this reason, most statistics about firms are broken out by size categories. In contrast, firms with 1,000 or more workers are the most influential employer group in calculating statistics regarding covered workers, since they employ the largest percentage of the nation’s workforce.

Exhibit M.2: Distribution of Employers, Workers, and Workers Covered by Health Benefits, by Firm Size, 2016

Throughout this report, we use the term “in-network” to refer to services received from a preferred provider. Family coverage is defined as health coverage for a family of four.

The survey asks firms what percentage of their employees earn less than a specified amount in order to identify the portion of a firm’s workforce that has relatively low wages. This year, the income threshold is $23,000 per year for lower-wage workers and $59,000 for higher-wage workers. These thresholds are based on the 25th and 75th percentiles of workers’ earnings as reported by the Bureau of Labor Statistics using data from the Occupational Employment Statistics (OES) (2015).6 The cutoffs were inflation-adjusted and rounded to the nearest thousand. Prior to 2013, wage cutoffs were calculated using the now-eliminated National Compensation Survey.

Rounding and Imputation

Some exhibits in the report do not sum to totals due to rounding. In a few cases, numbers from distribution exhibits may not add to the numbers referenced in the text due to rounding. Although overall totals and totals for size and industry are statistically valid, some breakdowns may not be available due to limited sample sizes or a high relative standard error. Where the unweighted sample size is fewer than 30 observations, exhibits include the notation “NSD” (Not Sufficient Data). Many breakouts by subsets may have a large standard error, meaning that even large differences may not be statistically significant.

To control for item nonresponse bias, Kaiser/HRET imputes values that are missing for most variables in the survey. On average, 6% of observations are imputed. All variables are imputed following a hotdeck approach. The hotdeck approach replaces missing information with observed values from a firm similar in size and industry to the firm for which data are missing. In 2016, there were 12 variables where the imputation rate exceeded 20%; most of these cases were for individual plan level statistics – when aggregate variables were constructed for all of the plans, the imputation rate is usually much lower. There are a few variables that Kaiser/HRET has decided not to impute; these are typically variables where “don’t know” is considered a valid response option (for example, firms’ opinions about the effectiveness of incentives to encourage worker participation in health and wellness programs). In addition, there are several variables in which missing data are calculated based on respondents’ answers to other questions (for example, employer contributions to premiums are calculated from the respondent’s premium and the worker contribution to premiums).
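The hotdeck idea described above can be illustrated with a minimal Python sketch. It is not the Kaiser/HRET procedure: the field names (`size`, `industry`, `deductible`), the exact-match definition of a "similar" firm, and the random choice of donor are all assumptions made for illustration.

```python
import random

def hotdeck_impute(firms, variable, rng):
    """Fill missing values of `variable` with an observed value from a donor
    firm in the same size/industry cell (an assumed definition of 'similar')."""
    # Collect observed values by (size, industry) cell.
    donors = {}
    for firm in firms:
        if firm[variable] is not None:
            cell = (firm["size"], firm["industry"])
            donors.setdefault(cell, []).append(firm[variable])

    imputed = []
    for firm in firms:
        filled = dict(firm)  # copy so the input records are not modified
        if filled[variable] is None:
            pool = donors.get((firm["size"], firm["industry"]))
            if pool:  # leave the value missing when the cell has no donor
                filled[variable] = rng.choice(pool)
                filled["imputed"] = True
        imputed.append(filled)
    return imputed

# Hypothetical records; None marks item nonresponse.
sample_firms = [
    {"size": "3-199", "industry": "Retail", "deductible": 1500},
    {"size": "3-199", "industry": "Retail", "deductible": None},
    {"size": "200+", "industry": "Finance", "deductible": 1000},
    {"size": "200+", "industry": "Retail", "deductible": None},
]
result = hotdeck_impute(sample_firms, "deductible", random.Random(0))
```

In this toy data the second firm receives the value from the only donor in its cell, while the fourth firm stays missing because no firm in its cell reported a value. A production procedure would need a fallback rule for such empty cells.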

Starting in 2012, the method to calculate missing premiums and contributions was revised; if a firm provides a premium for single coverage or family coverage, or a worker contribution for single coverage or family coverage, that information is used in the imputation. For example, if a firm provided a worker contribution for family coverage but no premium information, a ratio between the family premium and family contribution was imputed and then the family premium was calculated. In addition, in cases where premiums or contributions for both family and single coverage were missing, the hotdeck procedure was revised to draw all four responses from a single firm. The change in the imputation method did not have a significant impact on the premium or contribution estimates.
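The ratio-based example above can be worked through in a short Python sketch. The dollar amounts are hypothetical, and taking the ratio from a single donor firm is an assumption; the text does not specify how the ratio itself is imputed.

```python
def family_premium_from_contribution(worker_contribution, donor_premium, donor_contribution):
    """Estimate a missing family premium from a reported worker contribution,
    using a premium-to-contribution ratio taken from a donor firm (assumed)."""
    if donor_contribution <= 0:
        raise ValueError("donor contribution must be positive to form a ratio")
    ratio = donor_premium / donor_contribution
    return worker_contribution * ratio

# Hypothetical values: the firm reports a $5,000 family contribution but no premium;
# the donor firm has an $18,000 family premium and a $6,000 family contribution.
estimated_premium = family_premium_from_contribution(5000.0, 18000.0, 6000.0)

# The employer contribution then follows from the premium and the worker contribution.
employer_contribution = estimated_premium - 5000.0
```

With these inputs the donor ratio is 3.0, so the estimated family premium is $15,000 and the implied employer contribution is $10,000.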

Starting in 2014, we estimate separate single and family coverage premiums for firms that provide premium amounts as the average cost for all covered workers, instead of differentiating between single and family coverage. This method more accurately accounts for the portion that each type of coverage contributes to the total cost for the 0.4% of covered workers who are enrolled at firms affected by this adjustment.

Sample Design

We determined the sample requirements based on the universe of firms obtained from the U.S. Census Bureau. Prior to the 2010 survey, the sample requirements were based on the total counts provided by Survey Sampling Incorporated (SSI) (which obtains data from Dun and Bradstreet). Over the years, we found the Dun and Bradstreet frequency counts to be volatile due to duplicate listings of firms, or firms that are no longer in business. These inaccuracies vary by firm size and industry. In 2003, we began using the more consistent and accurate counts provided by the Census Bureau’s Statistics of U.S. Businesses and the Census of Governments as the basis for post-stratification, although the sample was still drawn from a Dun and Bradstreet list. In order to further address this concern at the time of sampling, starting in 2009, we use Census Bureau data to determine the number of firms to attempt to interview within each size and industry category.

Starting in 2010, we defined Education as a separate sampling category for the purposes of sampling, rather than as a subgroup of the Service category. In the past, Education firms were a disproportionately large share of Service firms. Education is controlled for during post-stratification, and adjusting the sampling frame to also control for Education allows for a more accurate representation of both the Education and Service industries.

In past years, both private and government firms were sampled from the Dun and Bradstreet database. Beginning in 2009, Government firms were sampled from the 2007 Census of Governments. This change was made to eliminate the overlap of state agencies that were frequently sampled from the Dun and Bradstreet database. The sample of private firms is screened for firms that are related to state/local governments, and if these firms are identified in the Census of Governments, they are reclassified as government firms and a private firm is randomly drawn to replace the reclassified firm. The federal government is not included in the sample frame.

Finally, the data used to determine the 2016 Employer Health Benefits Survey sample frame include the U.S. Census Bureau’s 2012 Statistics of U.S. Businesses and the 2012 Census of Governments. At the time of the sample design (December 2015), these data represented the most current information on the number of public and private firms nationwide with three or more workers. As in the past, the post-stratification is based on the most up-to-date Census data available (the 2013 update to the Statistics of U.S. Businesses was purchased during the survey fielding period).

Weighting and Statistical Significance

Because Kaiser/HRET selects firms randomly, it is possible through the use of statistical weights to extrapolate the results to national (as well as firm size, regional, and industry) averages. These weights allow us to present findings based on the number of workers covered by health plans, the number of total workers, and the number of firms. In general, findings in dollar amounts (such as premiums, worker contributions, and cost sharing) are weighted by covered workers. Other estimates, such as the offer rate, are weighted by firms. Specific weights were created to analyze the HDHP/SO plans that are offered with a Health Reimbursement Arrangement (HRA) or that are Health Savings Account (HSA)-qualified. These weights represent the proportion of employees enrolled in each of these arrangements.
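To illustrate why the choice of weight matters, the following Python sketch computes the same average under a firm weight and under a covered-worker weight. The two records and their weights are hypothetical and are not survey values.

```python
def weighted_mean(values, weights):
    """Return the weighted mean of `values` using `weights`."""
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total

# Hypothetical records: one represents many small firms with few covered workers,
# the other represents few large firms with many covered workers.
records = [
    {"premium": 6000.0, "firm_weight": 900.0, "covered_worker_weight": 4500.0},
    {"premium": 7000.0, "firm_weight": 100.0, "covered_worker_weight": 45500.0},
]
premiums = [r["premium"] for r in records]

firm_weighted = weighted_mean(premiums, [r["firm_weight"] for r in records])
worker_weighted = weighted_mean(premiums, [r["covered_worker_weight"] for r in records])
```

Here the firm-weighted average is $6,100, pulled toward the small-firm record, while the covered-worker-weighted average is $6,910, pulled toward the large-firm record.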

Calculation of the weights follows a common approach. We trimmed the weights in order to reduce the influence of weight outliers. First, we grouped firms into size and offer categories of observations. Within each stratum, we identified the median and the interquartile range of the weights and calculated the trimming cut point as the median plus six times the interquartile range (M + [6 * IQR]). Weight values larger than this cut point are trimmed to the cut point. In all instances, very few weight values were trimmed. Finally, we calibrated the weights to the U.S. Census Bureau’s 2013 Statistics of U.S. Businesses for firms in the private sector, and the 2012 Census of Governments as the basis for calibration / post-stratification for public sector firms. Historic employer-weighted statistics were updated in 2011.
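The trimming rule (M + [6 * IQR]) can be sketched in Python for a single stratum. The weights below are hypothetical, and the quartile convention (`method="inclusive"` in the standard library) is an assumption, since the text does not say how quartiles are computed.

```python
from statistics import median, quantiles

def trim_weights(weights, multiplier=6.0):
    """Cap the weights of one stratum at median + multiplier * IQR.

    Returns the trimmed weights and the cut point.
    """
    q1, _, q3 = quantiles(weights, n=4, method="inclusive")  # assumed quartile rule
    cut_point = median(weights) + multiplier * (q3 - q1)
    return [min(w, cut_point) for w in weights], cut_point

# Hypothetical weights for one size/offer stratum, with one outlier.
stratum_weights = [10.0, 12.0, 11.0, 13.0, 9.0, 200.0]
trimmed, cut = trim_weights(stratum_weights)
```

For these values the median is 11.5 and the interquartile range is 2.5, so the cut point is 26.5 and only the outlying weight of 200 is reduced.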

We conducted a follow-up survey of those firms with 3 to 49 workers that refused to participate in the full survey and conducted a McNemar test to verify that the results of the follow-up survey are comparable to the results from the original survey.

Between 2006 and 2012, only limited information was collected on conventional plans. Starting in 2013, information on conventional plans is collected under the PPO section and therefore, the covered worker weight is representative of all plan types for which the survey collects information.

The survey contains a few questions on employee cost sharing that are asked only of firms that indicate in a previous question that they have a certain cost-sharing provision. For example, copayment amounts for physician office visits are asked only of those that report they have copayments for such visits. Because the composite variables (using data from across all plan types) are reflective of only those plans with the provision, separate weights for the relevant variables were created in order to account for the fact that not all covered workers have such provisions.

To account for design effects, the statistical computing package R and the library package “survey” were used to calculate standard errors.7,8 All statistical tests are performed at the .05 significance level, unless otherwise noted. For figures with multiple years, statistical tests are conducted for each year against the previous year shown, unless otherwise noted. No statistical tests are conducted for years prior to 1999. In 2012, the method to test the difference between distributions across years was changed to use a Wald test, which accounts for the complex survey design. In general, this method is more conservative than the approach used in prior years.

Estimates for a given subgroup (firms with 25-49 workers, for instance) are tested against estimates for all other firm sizes not included in that subgroup (all firm sizes NOT including firms with 25-49 workers, in this example). Tests are done similarly for region and industry; for example, Northeast is compared to all firms NOT in the Northeast (an aggregate of firms in the Midwest, South, and West). However, estimates compared across plan types (for example, average premiums in PPOs) are tested against the “All Plans” estimate. In some cases, we also test plan-specific estimates against similar estimates for other plan types (for example, single and family premiums for HDHP/SOs against single and family premiums for HMO, PPO, and POS plans); these are noted specifically in the text. The two types of statistical tests performed are the t-test and the Wald test. The small number of observations for some variables resulted in large variability around the point estimates. These observations sometimes carry large weights, primarily for small firms. The reader should be cautioned that these influential weights may result in large movements in point estimates from year to year; however, these movements are often not statistically significant.

2016 Survey

Between 2015 and 2016, we conducted a series of focus groups that led us to the conclusion that human resource and benefit managers at firms with 20 to 49 employees think about health insurance premiums more like benefit managers at smaller firms than like those at larger firms. Therefore, starting in 2016, we altered the health insurance premium question pathway for firms with 20-49 employees to match that of firms with 3-19 employees rather than firms with 50 or more employees. This change affected firms representing 8% of the total covered worker weight. We believe that these questions produce comparable responses and that this edit does not create a break in trend.

Firms with 50 or more workers were asked: “Does your firm offer health benefits for current employees through a private or corporate exchange?” Employers were still asked for plan information about their HMO, PPO, POS and HDHP/SO plan regardless of whether they purchased health benefits through a private exchange or not.

Starting in 2015, employers were asked how many full-time equivalent workers (FTEs) they employed. In cases in which the number of full-time equivalents was relevant to the question, interviewer skip patterns may have depended on the number of FTEs. In 2016, questions were added to ask firms to estimate the number of hours that a typical part-time worker averaged over the course of one week in order to more accurately determine which firms might be subject to the Employer Shared Responsibility Provision of the Affordable Care Act. In cases where a firm did not know how many FTEs it employed, we calculated the number based on the number of part-time hours the firm reported. In all cases, we assumed that firms with more than 250 full-time employees had more than 50 FTEs.
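The text does not give the formula used to derive FTEs from part-time hours, so the following Python sketch is only one plausible approach. It assumes, hypothetically, that each 30 weekly part-time hours counts as one FTE; the function name, parameters, and the 30-hour divisor are illustrative and should not be read as the survey's actual calculation.

```python
def estimate_ftes(full_time_workers, part_time_workers, avg_part_time_hours_per_week,
                  full_time_hours_per_week=30.0):
    """Estimate full-time equivalents from head counts and typical part-time hours.

    Assumption: total weekly part-time hours divided by `full_time_hours_per_week`
    gives the part-time contribution to the FTE count.
    """
    part_time_ftes = part_time_workers * avg_part_time_hours_per_week / full_time_hours_per_week
    return full_time_workers + part_time_ftes

# Hypothetical firm: 40 full-time workers and 30 part-time workers averaging 15 hours a week.
ftes = estimate_ftes(40, 30, 15.0)
has_50_or_more_ftes = ftes >= 50
```

Under these assumptions the firm has 55 FTEs even though it employs only 40 full-time workers, which is why typical part-time hours matter for the 50-FTE determination.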

Starting in 2016, we made significant revisions to how the survey asks employers about their prescription drug coverage. In most cases, information reported in Prescription Drug Benefits (Section 9) is not comparable with previous years’ findings. First, in addition to the four standard tiers of drugs (generics, preferred, non-preferred, and lifestyle), we began asking firms about cost sharing for a drug tier that covers only specialty drugs. This new tier pathway in the questionnaire has an effect on the trend of the four standard tiers, since respondents to the 2015 survey might have previously categorized their specialty drug tier as one of the other four standard tiers. We did not modify the question about the number of tiers a firm’s cost-sharing structure has, but in cases in which the highest tier covered exclusively specialty drugs we reported it separately. For example, in Exhibits 9.3 and 9.4, a firm with three tiers may only have copays or coinsurances for two tiers because its third tier copay or coinsurance is being reported as a specialty tier. Furthermore, in order to reduce survey burden, firms were asked about the plan attributes of only their plan type with the most enrollment. Therefore, in most cases, we no longer make comparisons between plan types. Lastly, prior to 2016, we required firms’ cost sharing tiers to be sequential, meaning that the second tier copay was higher than the first tier, the third tier was higher than the second, and the fourth was higher than the third. As drug formularies have become more intricate, many firms have minimums and maximums attached to their copays and coinsurances, leading us to believe it was no longer appropriate to assume that a firm’s cost sharing followed this sequential logic.

In cases where a firm had multiple plans, they were asked about their strategies for containing the cost of specialty drugs for the plan type with the largest enrollment. Between 2015 and 2016, we modified the series of ‘Select All That Apply’ questions regarding cost containment strategies for specialty drugs. In 2016, we elected to impute firms’ responses to these questions. We removed the option “Separate cost sharing tier for specialty drugs” and added specialty drugs as their own drug tier questionnaire pathway. We added question options on mail order drugs and prior authorization.

We discovered that the HRA and HSA distribution cutoff thresholds presented in prior years’ High Deductible Health Plan Section (Section 8) were calculated using each firm’s covered worker weight rather than the HRA- or HSA-specific enrollment weights. Starting in 2016, the means and their subsequent distributions are now calculated using these plan-specific enrollment weights and therefore those thresholds are not directly comparable to prior-year statistics.

In our 2015 calculation of out-of-pocket (OOP) maximums, we mistakenly included plans with $0 OOP maximums, representing 2.4% of the total covered worker weight, which pushed the distribution downward in 2015 Exhibit 7.31. In the corresponding 2016 exhibit (7.36), firms with $0 OOP maximums have been excluded.

Twenty-five firms reported allowing flexible spending account (FSA) employee contributions above the legal limit of $2,550 in 2016. Although these firms were asked to confirm that their maximum contributions were above $2,550, we nonetheless recoded their responses to the legal ceiling of $2,550 and intend to provide additional clarification that we are interested in only a firm’s health FSA in the future.

In 2016, we modified our questions about telemedicine to clarify that we were interested in the provision of health care services, and not merely the exchange of information, through telecommunication. We also added dependent and spousal questions to our health risk assessment question pathway.

In 2016, we ceased publication of the slide “Percentage of Firms Offering Health Benefits, by Firm Characteristics” (Exhibit 2.4 in the 2015 EHBS report). Since firm characteristics are not collected from respondents that solely answer the offer question, this exhibit had been calculated using the employer weight derived from only firms that had completed the full survey.

Annual inflation estimates are usually calculated from April to April. The 12-month percentage change for May to May was 1%.9

Historical Data

Data in this report focus primarily on findings from surveys jointly authored by the Kaiser Family Foundation and the Health Research & Educational Trust, which have been conducted since 1999. Prior to 1999, the survey was conducted by the Health Insurance Association of America (HIAA) and KPMG using a similar survey instrument, but data are not available for all the intervening years. Following the survey’s introduction in 1987, the HIAA conducted the survey through 1990, but some data are not available for analysis. KPMG conducted the survey from 1991-1998. However, in 1991, 1992, 1994, and 1997, only larger firms were sampled. In 1993, 1995, 1996, and 1998, KPMG interviewed both large and small firms. In 1998, KPMG divested itself of its Compensation and Benefits Practice, and part of that divestiture included donating the annual survey of health benefits to HRET.

This report uses historical data from the 1993, 1996, and 1998 KPMG Surveys of Employer-Sponsored Health Benefits and the 1999-2015 Kaiser/HRET Survey of Employer-Sponsored Health Benefits. For a longer-term perspective, we also use the 1988 survey of the nation’s employers conducted by the HIAA, on which the KPMG and Kaiser/HRET surveys are based. The survey designs for the three surveys are similar.